Finding, Recruiting, and Screening for User Research Participants for Startups

Part 1: Building Faster Feedback Loops Using Qualitative User Research

One method we’ve found very useful for testing ideas is conducting 1:1 qualitative user research interviews with about five participants. For this approach to be effective, we need to be able to spot patterns within this group, e.g. three or more participants share a reaction or two have a strong and highly similar reaction. These patterns should represent generalizable insights that we believe will apply to our target market, which this smaller set of participants accurately represents.
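As a rough illustration of the pattern rule above (three or more participants sharing a reaction, or two sharing a strong one), here is a minimal Python sketch. The function name, the tuple layout, and the sample notes are all hypothetical and not taken from any real research round. It also assumes you have already tagged each observation by hand and judged whether two reactions are "highly similar".

```python
from collections import defaultdict

def find_patterns(observations, shared_min=3, strong_min=2):
    """Return reactions seen in at least `shared_min` participants,
    or strongly expressed by at least `strong_min` participants.

    observations: list of (participant, reaction_tag, is_strong) tuples.
    """
    everyone = defaultdict(set)  # reaction -> participants who had it
    strong = defaultdict(set)    # reaction -> participants who had it strongly
    for participant, reaction, is_strong in observations:
        everyone[reaction].add(participant)
        if is_strong:
            strong[reaction].add(participant)
    return sorted(
        reaction
        for reaction in everyone
        if len(everyone[reaction]) >= shared_min
        or len(strong[reaction]) >= strong_min
    )

# Hypothetical notes from five sessions (P1..P5).
notes = [
    ("P1", "confused by headline", False),
    ("P2", "confused by headline", False),
    ("P4", "confused by headline", False),
    ("P3", "wants mock data generator", True),
    ("P5", "wants mock data generator", True),
    ("P2", "dislikes pricing page", True),
]
patterns = find_patterns(notes)
print(patterns)
```

Using sets of participants rather than raw counts means one participant who repeats the same reaction several times is only counted once.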

A crucial early step in qualitative user research is determining who specifically to talk to - who will provide relevant, representative feedback that leads to larger learnings? If you don’t talk to a group that you’re confident represents a broader range of opinions, your conclusions are not generalizable and it becomes difficult to decide on what to do next or how to apply your learnings.

What if you don’t know who your ideal customer profile or target market is?

If you're pre-product in the inception or idea stage, you might not have a well-defined target audience or ideal customer profile (ICP) yet. Or your current definition might lack specificity and detail. Don't panic! This is common in the early stages.

However, this can often lead to the instinct to throw a spaghetti-like mess of ideas at the wall to see what sticks. “I have a great idea,” you might think, and “if my friends and family say they like it then it must be great and I’m on the right track.” Wrong!

This is a dangerous trap, described extensively in The Mom Test. Your friends and family are likely biased towards being kind and positive. More importantly, unless they share specific identifying traits, they don't represent a replicable group of early users.

Even if they genuinely love what you're building, you'll struggle to find your next users once you exhaust your personal network. Without knowing which characteristics to look for, you'll be left approaching potential customers at random.

People have a huge range of needs, wants, habits, and existing tools, so you are unlikely to succeed or learn quickly by taking a random approach; you’d be relying on luck. While luck matters, I’ve found as a founder that I am more likely to “get lucky” through tight learning loops where I test specific hypotheses with particular groups who share common traits. This approach allows me to validate or invalidate ideas faster and apply insights to subsequent iterations.

Even for consumer applications, where theoretically your market might one day be “everyone with a smartphone” (e.g. for a mobile social app), you still benefit from narrowing down in the early stages to a specific hypothesis about your earliest adopters. What are the characteristics that make someone likely to be one of the first to try your product?

For a business targeting enterprise customers or developers, you might need to segment into distinct groups: your buyer and user might be different people, and your organizational champion might be yet a different stakeholder.

How do you decide who to talk to?

For each persona, identify their key attributes to clearly visualize who they are - and who they aren't. If this feels overwhelming, start by prioritizing just one group.

I typically start with the group I have the strongest hypothesis about and/or the group I expect will provide the most valuable insights through user research interviews.

In his blog post “How to find great participants for your user study”, Michael Margolis shares a useful worksheet for creating user research participant screeners that I’ve also found helpful in defining my ICP for research sessions.

While you don't need to use this exact worksheet, you should develop a clear sense of the defining characteristics that will help you identify appropriate participants for your user research interviews.

Recent Example: CodeYam Landing Page Research

At CodeYam, the startup I co-founded with Jared Cosulich, we're developing a new category of tools for software developers and their teams. Our product, called 'software simulation,' combines several technologies including generative AI and static code analysis to create a new application.

A key challenge is that while 'software simulation' is a new term, it most accurately describes what we're building. More familiar terminology would lead to incorrect assumptions about our product.

Our terminology is inherently ambiguous to anyone not intimately familiar with our team and product. As an early-stage startup launching a new product, that currently includes most people.

We aim to be precise in how we define this new tool for software builders and communicate its value, particularly for understanding and testing code changes, whether they're AI-generated, manually coded, or a combination of both.

We decided to run several landing page tests for CodeYam to refine how we describe our product and communicate its value.

Before this, I had attended several industry events (including Google Cloud Next, GitHub Universe, the Female Founder Retreat, Boldstart Founder Summit, and local AI-focused meetups for founders and developers in Boulder and Denver). These conversations generated new ideas for describing and positioning CodeYam, based on my impromptu experiments with different explanations and noting who showed interest.

My cofounder and I also explored extracting part of our core engine into a standalone product. This approach would let developers experience CodeYam earlier through a playground environment or a focused tool for automatically generating mock data, which could be a significant time-saver for development teams.

For our user research sessions for the landing page, this is what we decided on for our criteria:

We used our own variation of Jake Knapp’s Design Sprint FigJam Template to stay organized.

Once you’ve defined what traits to include / exclude, there are two crucial next steps:

Figure out where you'll recruit participants from

Establish how you'll screen responses

Figure out where you’ll recruit participants from

This approach varies significantly depending on your startup's stage and whether you're pre- or post-product-market fit.

If you have existing customers and usage data, these can effectively pre-screen candidates for user research. For instance, I frequently receive emails from larger tech companies (usually from product managers or dedicated researchers) seeking feedback from early-stage founders like me who have used specific features or match certain behavior patterns. They can identify potential research participants based on their usage data and contact us via our registration emails. Many of these companies add an additional screening layer, such as a brief survey, to verify eligibility for specific research initiatives.

As a brief aside, I love participating in these sessions. Providing feedback on products I regularly use (or have churned from) is as enjoyable for me as it is valuable for their teams. As a bonus, I learn firsthand how leading high-growth companies design and conduct their user research. I can adopt effective methodologies for CodeYam and note what approaches to avoid when sessions feel cumbersome.

At Lyft, I worked with an exceptional UX Research team within the Design organization built and led by Katie Dill. Collaborating closely with this team, I witnessed firsthand their invaluable contributions to ensuring we gathered feedback from relevant users, prioritized effective product and marketing strategies, and learned from every launch. Their research helped us mitigate risks before building and launching innovative features, for products ranging from the rider app itself to new products like electric bikes and scooters to transit payment systems.

Sometimes we utilized external vendors such as User Testing. While expensive, these services save significant time when you have clearly defined participant criteria, need rapid prototyping and research iterations, and prefer not to handle participant recruitment yourself.

As an early-stage startup, our approach differs significantly. Rather than spending substantial money on user research, we focus on staying scrappy by handling our own recruitment and screening.

In this scenario, identifying where your target users already gather and reaching them there becomes even more crucial. Adopt an 'always recruiting' mindset to maintain a pipeline of eligible participants ready for any upcoming research cycle.

For CodeYam, given our criteria, we decided to recruit from the following platforms:

Craigslist (specifically in the SF jobs and computers gigs sections). While results vary, we've found eligible participants here previously. With a well-designed screener that's difficult to game, this platform can yield good participants.

Social media posts primarily targeting second-degree connections and non-connections matching our feedback criteria. I focused primarily on LinkedIn and X, and have recently expanded to Bluesky and Threads.

Selective posting in communities with high concentrations of our target audience. We use this approach sparingly to avoid community fatigue. Some communities, like the Next.js Discord, prohibit research recruitment entirely; be mindful of that and always respect each community's guidelines.

As a final option, direct outreach to individuals in our personal networks who match our research criteria for specific rounds.

We're also planning to experiment with these additional recruitment channels:

Reaching out to qualified CodeYam waitlist subscribers who meet our research criteria

Targeted outreach to specific individuals who match our criteria and whose feedback would be particularly valuable

Fair warning, recruiting often feels HARD. That’s normal and it’s going to require consistent energy and effort. You’ll likely get progressively better with each iteration.

If you feel like you can’t find folks in your target demographic, that’s a yellow flag. You'll eventually need to reach these same customers once your product launches, and finding and recruiting people in your target audience typically becomes harder, not easier, as you exhaust the low-hanging fruit.

Recent Example: CodeYam Craigslist Post in SF

One thing we’ve gone back and forth on when posting to Craigslist is how much to reveal in the post about who we’re looking for.

In previous research, we kept descriptions deliberately vague to prevent candidates from tailoring responses to match what they thought we wanted, rather than representing themselves accurately.

Recently, we've tested being more explicit about who we want to speak with, specifically using headlines that appeal directly to developers and technical leaders. Results have been mixed.

While this approach attracts more relevant candidates, we've encountered some who successfully gamed our screener, forcing us to cancel sessions at the last minute. We're improving our evaluation process, but it remains a work in progress. More on this approach in the next section.

Establish how you’ll screen responses

For CodeYam, we use Google Forms to collect screening responses, then export these to a spreadsheet to evaluate potential participants. This allows us to efficiently categorize candidates for either current or future research sessions.

Here’s our most recent screening survey: https://forms.gle/khKxsVPwQeT1UNbw8

We've reused variations of this survey a handful of times, initially setting specific research dates, then keeping the link active with broader timeframes. This allows us to continuously collect responses even after completing a specific research round.

A few additions we've found especially helpful in our screening process:

Requiring participants to provide a public social media handle (preferably LinkedIn) where we can verify their identity and background. While not perfect, as not all developers maintain social profiles, this has significantly improved participant quality. When applicants don't provide this information, they typically prove unsuitable for our studies.

Requiring participants to join research sessions using a laptop rather than mobile devices, which previously caused difficulties viewing prototypes.

Requiring participants to keep their camera on during sessions. This helps with identity verification and allows us to observe genuine reactions to our prototypes: confusion, excitement, etc. Recently, we caught someone who had appropriated another person's LinkedIn profile and biographical information; their true identity became immediately apparent once on camera.

Additionally, while your headline might state who you're looking for, the screener survey itself should avoid revealing your specific selection criteria. Making these too obvious encourages applicants to tailor their responses to what they think you want rather than representing themselves accurately. Surprisingly, we've encountered this issue multiple times even with seemingly straightforward screening questions.

Recently, we've noticed what appear to be AI-generated responses in our screening surveys. After initially being caught off guard, we identified patterns that made these formulaic submissions evident. While we may eventually use AI to assist with screening, our current volume is manageable enough for manual review.
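The mechanical part of the screening described in this section (profile link provided, laptop, camera on, available for the current round) can be sketched in Python against a spreadsheet export. This is a minimal sketch under stated assumptions, not our actual tooling. The column names and sample rows are hypothetical; a real Google Forms export uses your question text as column headers. It only pre-sorts responses and does not replace manually reviewing each one.

```python
import csv
import io

# Hypothetical export; real column headers will match your form's questions.
SAMPLE_EXPORT = """name,profile_url,has_laptop,camera_ok,available_this_round
Ada,https://www.linkedin.com/in/example-ada,Yes,Yes,Yes
Bo,,Yes,Yes,Yes
Cy,https://www.linkedin.com/in/example-cy,Yes,Yes,No
Di,https://www.linkedin.com/in/example-di,No,Yes,Yes
"""

def categorize(row):
    """Return 'current', 'future', or 'needs review' for one response."""
    meets_requirements = (
        row["profile_url"].strip() != ""   # public profile provided
        and row["has_laptop"] == "Yes"     # can join from a laptop
        and row["camera_ok"] == "Yes"      # agrees to keep camera on
    )
    if not meets_requirements:
        return "needs review"
    # Qualified people who can't make this round stay in the pipeline.
    return "current" if row["available_this_round"] == "Yes" else "future"

def categorize_export(csv_text):
    """Map each respondent's name to a category."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["name"]: categorize(row) for row in reader}

results = categorize_export(SAMPLE_EXPORT)
for name, category in results.items():
    print(f"{name}: {category}")
```

Responses that miss a requirement are marked "needs review" rather than rejected outright, since some developers legitimately don't maintain a public profile.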

Managing Recruiting and Scheduling

When reviewing screener responses with an established research schedule, I identify top candidates and match them to their available time slots. I typically create a dedicated scheduling tab within the same spreadsheet to organize this process.
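The matching step can also be sketched in a few lines of Python. This is only an illustration of the idea, assuming candidates are already ranked by how well they fit the criteria and that each has listed the slots they can make. The names, slot labels, and `assign_slots` helper are hypothetical.

```python
def assign_slots(ranked_candidates, slots):
    """Greedy matching: walk candidates in priority order and give each
    the first still-open slot from their own availability list.

    Returns (schedule, open_slots), where schedule maps slot -> candidate.
    """
    open_slots = list(slots)
    schedule = {}
    for name, availability in ranked_candidates:
        for slot in availability:
            if slot in open_slots:
                schedule[slot] = name
                open_slots.remove(slot)
                break  # one session per candidate
    return schedule, open_slots

slots = ["Tue 10:00", "Tue 13:00", "Wed 10:00"]
ranked = [
    ("Candidate A", ["Tue 10:00", "Wed 10:00"]),
    ("Candidate B", ["Tue 10:00"]),
    ("Candidate C", ["Wed 10:00", "Tue 13:00"]),
]
schedule, unfilled = assign_slots(ranked, slots)
print(schedule)
print(unfilled)
```

A greedy pass like this can leave a slot empty that a different ordering would have filled (Candidate B above gets no slot). With about five sessions per round, that is easy to spot and adjust by hand.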

Here’s an example (with fake data):

Once I’ve chosen a participant for a time slot, I’ll send them a personalized email using this template:

Recently, I've started using Superhuman's snippets functionality to streamline my email outreach. While I still manually update day/date/time information in the subject line and body, plus verify the recipient's name, these snippets significantly reduce the time spent drafting each invitation.

Alternatively, once you've sent this email once, you can simply copy the sent message into a new draft, updating only the recipient's email address, their name, and any time-specific information. This approach works well if you don't have access to email snippet-like templating functionality.
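If you'd rather script this step, the same fill-in-the-blanks idea can be sketched with Python's standard `string.Template`. The wording of the invitation below is a hypothetical placeholder, not our actual template, and the `render_invite` helper is illustrative only.

```python
from string import Template

# Hypothetical invitation text; replace with your own wording.
INVITE = Template(
    "Subject: User research session on $day, $date at $time\n\n"
    "Hi $first_name,\n\n"
    "Thanks for filling out our screener. Are you available for a session "
    "on $day, $date at $time?\n"
)

def render_invite(first_name, day, date, time):
    """Fill in the per-recipient fields of the invitation."""
    # substitute() raises KeyError if any placeholder is left unfilled,
    # which guards against sending a half-completed email.
    return INVITE.substitute(
        first_name=first_name, day=day, date=date, time=time
    )

message = render_invite("Sam", "Tuesday", "March 4", "10:00 AM PT")
print(message)
```

Because the day, date, and time appear in both the subject line and the body, filling them from one set of arguments avoids the two getting out of sync.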

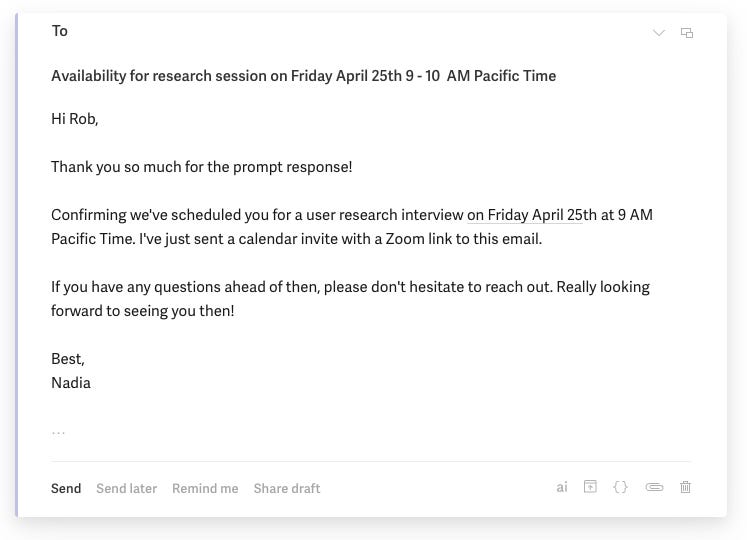

Once a participant confirms availability, I send them both a confirmation email and calendar invitation containing all the meeting details.

Here’s what the confirmation email looks like:

And here’s what the calendar invite looks like:

As soon as we establish session timeframes, I create calendar HOLD events and invite team members who will participate in the research sessions. Currently, our product designer Dani joins these sessions as an observer and note-taker. We also use Granola to generate an automated transcript and take meeting notes. In the past, we recorded sessions but found we never re-watched the recordings, so that was overkill.

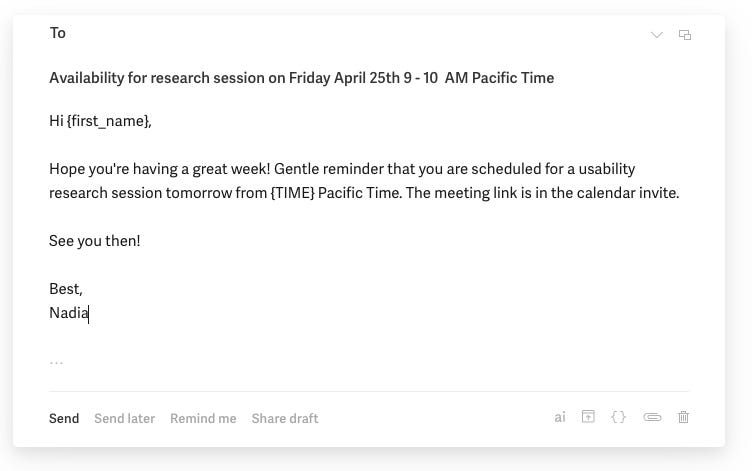

Finally, one day before the research session, I’ll send a reminder email. That looks like this:

I also verify that each participant has recently confirmed their attendance and ideally accepted the calendar invitation (though this isn't always the case).

TL;DR and Key Takeaways

Effective user research depends critically on recruiting, screening, and selecting the right participants. To succeed:

Define precisely who you want to interview and their key attributes.

Identify channels where your target participants already gather.

Implement thoughtful screening to identify truly qualified participants.

Develop a simple system for managing participant communication and follow-up.

Our current process blends automation with manual effort, and we continuously refine it.

Recruitment becomes particularly challenging when seeking participants with highly specific attributes—so recruit early and continuously, well before scheduling specific research slots.

I hope you find this helpful! Have feedback or questions? Please reach out.

You can also follow what we’re building at CodeYam more directly at blog.codeyam.com.